Neural Networks in Games

Creating real-time, data-driven controllers for digital characters remains a daunting task, even though vast quantities of ready-to-use, high-quality motion capture data are available. In part, this is because character controllers must satisfy many difficult requirements to be useful. More specifically, they must be able to learn from large quantities of data, they must not require much manual pre-processing of that data, and they must execute extremely quickly at runtime without using a lot of memory.

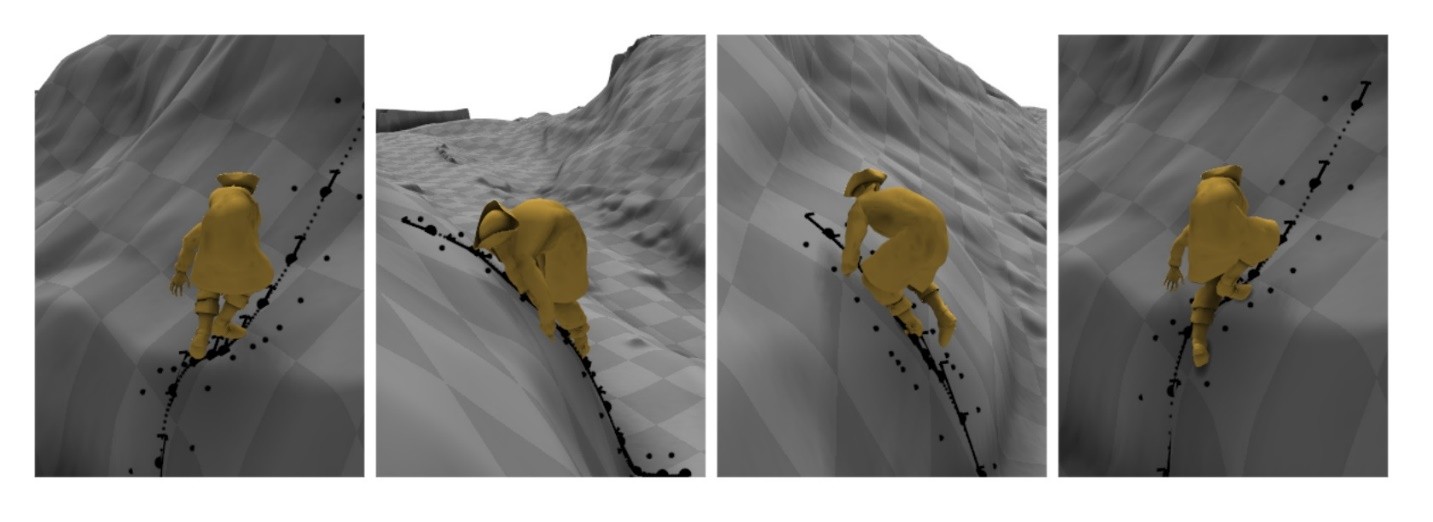

Very often, the game environment contains uneven terrain or large obstacles that require characters to perform different stepping, jumping, climbing, or avoidance motions to follow the user's instructions, as shown below. Such a scenario requires a framework that can learn from large volumes of high-dimensional motion data, since many different combinations of motion trajectories are involved.

New Developments

Recent developments in deep learning and neural networks have shown potential for satisfying these requirements. Neural networks can now learn from vast amounts of high-dimensional data and, once trained, execute quickly with a low memory footprint. However, this raises the question: how exactly can neural networks be applied to motion data so that they produce high-quality, real-time output with minimal processing? Let’s look at how this enhances the graphics in today’s video games and, consequently, the user experience.

The graphics in modern games are indeed astounding, but one thing game developers still struggle to portray is the variety and malleability of human motion. To make our game characters walk, jump, and run smoothly, we need an animation system driven by a neural network trained on captured motion data.

If you have played a modern game, you have probably noticed that many developers already achieve smooth character motion, but this tedious work is done by hand: developers work from libraries of motions and try to anticipate every possible combination. For example, what if the character waves his arms while walking? What if the character scratches her head while walking up the stairs? The possibilities are endless.

Possible Neural Network Solutions

Procedural generation, for example of universes and weapons, has existed in the gaming world for some time, but combining it with convincing animations across a wide range of variables is very difficult. Earlier, bug-ridden and disjointed attempts are acceptable for something like Ubisoft’s Grow Home, but for big-budget blockbusters such as Uncharted 4 they will not cut the mustard.

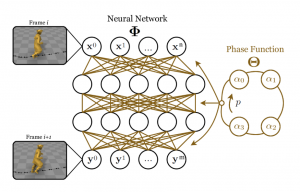

Well, there’s good news. Artificial intelligence researchers from Method Studios and the University of Edinburgh have created a machine learning system that learns from motion capture clips showing many different types of movement. Then, when the user gives a command, say, “Walk this way”, it produces an animation that fits both the command and the terrain. For example, the character will start walking and hop over a small obstacle along the way.

Best of all, there is no need to create custom animations for the transition from a walk to a hop. The algorithm does everything and produces smooth movement without any discombobulating switches between animation types.

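To avoid incorrect motions, the researchers add a phase function to the neural network, which keeps it from accidentally mixing different animation types, e.g. taking a step while jumping. The phase tracks where the character is in its locomotion cycle, and the network’s weights are computed as a function of it.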
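In the researchers’ published Phase-Functioned Neural Network (PFNN) work, the phase cycles from 0 to 2π over each locomotion cycle, and the network’s weights are produced by blending four stored weight sets with a cyclic Catmull-Rom spline evaluated at that phase. The Python sketch below illustrates only that blending step, assuming the weights are flattened into plain lists of floats; the function name `catmull_rom_phase` is hypothetical and this is not the researchers’ code.

```python
import math


def catmull_rom_phase(control_weights: list[list[float]], phase: float) -> list[float]:
    """Blend four control weight sets with a cyclic Catmull-Rom spline.

    control_weights: four equally sized weight lists (hypothetical flattened
    network weights), one per quarter of the locomotion cycle.
    phase: position in the cycle, in radians; values outside [0, 2*pi) wrap.
    """
    if len(control_weights) != 4:
        raise ValueError("expected exactly four control weight sets")
    t = 4.0 * phase / (2.0 * math.pi)
    w = t % 1.0                  # position inside the current quarter of the cycle
    base = math.floor(t)
    # Indices of the four neighbouring control points, wrapped around the cycle.
    k0, k1, k2, k3 = ((base + n - 1) % 4 for n in range(4))
    b0, b1, b2, b3 = (control_weights[k] for k in (k0, k1, k2, k3))
    # Standard Catmull-Rom basis, applied element-wise to every weight.
    return [
        y1
        + w * (0.5 * y2 - 0.5 * y0)
        + w * w * (y0 - 2.5 * y1 + 2.0 * y2 - 0.5 * y3)
        + w * w * w * (1.5 * y1 - 1.5 * y2 + 0.5 * y3 - 0.5 * y0)
        for y0, y1, y2, y3 in zip(b0, b1, b2, b3)
    ]


# Usage: four one-element "weight sets", sampled at a few phases.
stored = [[0.0], [1.0], [2.0], [3.0]]
for p in (0.0, math.pi / 4, math.pi / 2):
    print(p, catmull_rom_phase(stored, p))
```

At the quarter points of the cycle the function returns one stored weight set exactly, and between them it interpolates smoothly, so the network’s behaviour changes gradually as the phase advances rather than switching abruptly.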

Advantages and Drawbacks

This method is data-driven, meaning that the character does not merely play back a jump animation, but continuously adjusts its movements to the size of the obstacles. The results are very impressive. The neural network combines recorded animations into jaw-dropping, lifelike locomotion over varying terrain. Indeed, in the results produced, we see characters ducking, jumping, and even putting their arms out for balance when walking along a narrow path; everything is calculated on an as-needed basis.

In technical terms, the system takes as input the user controls, the character’s prior position, and the scene geometry, and then automatically generates high-quality motions that fulfill the user’s commands. There are some drawbacks. If you want to re-record something after the fact, you have to wait through 30 hours of training after the motion capture. Also, unlike with traditional canned animation, designers cannot easily go in and “touch up” the neural network’s output. Finally, even though the network’s decisions are computed quickly, they use considerably more processor time than playing back pre-recorded animation.
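The input/output loop described above can be sketched as a tiny fully connected network in Python. This is a toy under stated assumptions: the feature groups (`user_trajectory`, `previous_pose`, `terrain_heights`), the layer sizes, the ELU activation, and the random weights are all hypothetical stand-ins. A real controller would learn its weights from motion capture data, and in the phase-functioned design those weights would also vary with the phase.

```python
import math
import random
from dataclasses import dataclass


@dataclass
class FrameInput:
    """Hypothetical per-frame features, grouped as in the article's description."""
    user_trajectory: list[float]  # desired path derived from the player's controls
    previous_pose: list[float]    # character state from the previous frame
    terrain_heights: list[float]  # ground heights sampled around the character


def elu(x: float) -> float:
    """Exponential linear unit activation."""
    return x if x > 0 else math.exp(x) - 1.0


def dense(weights: list[list[float]], bias: list[float], x: list[float]) -> list[float]:
    """Fully connected layer: one weight row per output unit."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def random_layer(n_out: int, n_in: int, rng: random.Random):
    """Small random weights and zero biases (placeholders for trained values)."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


class TinyController:
    """Toy two-layer network mapping (controls, pose, terrain) to a next-pose vector."""

    def __init__(self, n_in: int, n_hidden: int, n_out: int, seed: int = 0):
        rng = random.Random(seed)
        self.n_in = n_in
        self.hidden = random_layer(n_hidden, n_in, rng)
        self.output = random_layer(n_out, n_hidden, rng)

    def step(self, frame: FrameInput) -> list[float]:
        # Concatenate all feature groups into a single input vector.
        x = frame.user_trajectory + frame.previous_pose + frame.terrain_heights
        if len(x) != self.n_in:
            raise ValueError(f"expected {self.n_in} features, got {len(x)}")
        h = [elu(v) for v in dense(*self.hidden, x)]
        # Linear output layer: the hypothetical next pose.
        return dense(*self.output, h)


# Usage: feed each predicted pose back in as the next frame's previous pose.
controller = TinyController(n_in=9, n_hidden=16, n_out=4)
pose = [0.0, 0.5, -0.5, 1.0]
for _ in range(3):
    pose = controller.step(FrameInput([0.1, 0.2, 0.3], pose, [0.0, 0.2]))
```

Feeding each output back in as the next input is what makes the character’s motion continuous from frame to frame; it also means every frame costs a full network evaluation, which is why this approach uses more processor time than looking up a pre-recorded pose.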

Another problem is that it only works with simple motions such as running or jumping; it cannot handle complex interactions with the environment, e.g. precise hand movements or interactions with other objects in the scene. Moreover, if the terrain is too steep, the animation will also look awkward, à la the mountaineers of The Elder Scrolls V: Skyrim. In the future, the researchers hope to develop a feature where characters react realistically to changes in terrain shape as well as terrain surfaces. This would allow characters to confidently walk and run on different terrains and in different physical conditions, for example, icy roads or flimsy rope bridges.

Practical Applications

The application of neural networks and machine learning is changing game software development. Right now, you can feed all of Shakespeare’s sonnets into a computer and it will produce a sonnet so close in form and tone to the originals that a layperson cannot tell which is which. Let’s apply this to gaming. Say you want to offer users a new Battlefield experience. In principle, you could feed every war story you can find into a computer, and it would give users interesting, personal stories every time they play.

EA announced at its E3 press conference that it is working on this sort of technology. The company also mentioned that it is being developed in its experimental SEED division, but did not elaborate further.

Let’s keep in mind that neural networks are still in the early stages of development, but the future looks promising for video game development if we can harness their power. Groundbreaking research has shown how neural-network-powered AI could revolutionize the way game characters animate realistically through complex game environments in real time. Neural networks have the power to make games more realistic than ever before and offer enormous potential to developers worldwide. So be sure to keep an eye out for new technologies that make games come alive. This will be an indelible moment in game development.